“Cyberspecies Proximity” (2020) explores what it will mean to share our sidewalks, elevators and transport systems in close proximity with mobile intelligent robots. Anna Dumitriu and Alex May undertook a STARTS Residency to develop their explorations of robotic movement through an in-depth collaboration with the Human Robot Co-Mobility project led by the New Technologies team at Schindler.

The Cyberspecies Proximity Robot

The Cyberspecies Proximity Robot combines the way-finding technologies used in delivery and maintenance robots with an ability to communicate with us and to manipulate our emotions through body language, embodied in a delicate humanoid form. The work challenges audiences to confront the technological, ethical, and societal questions raised by the advent of urban socially-aware robots.

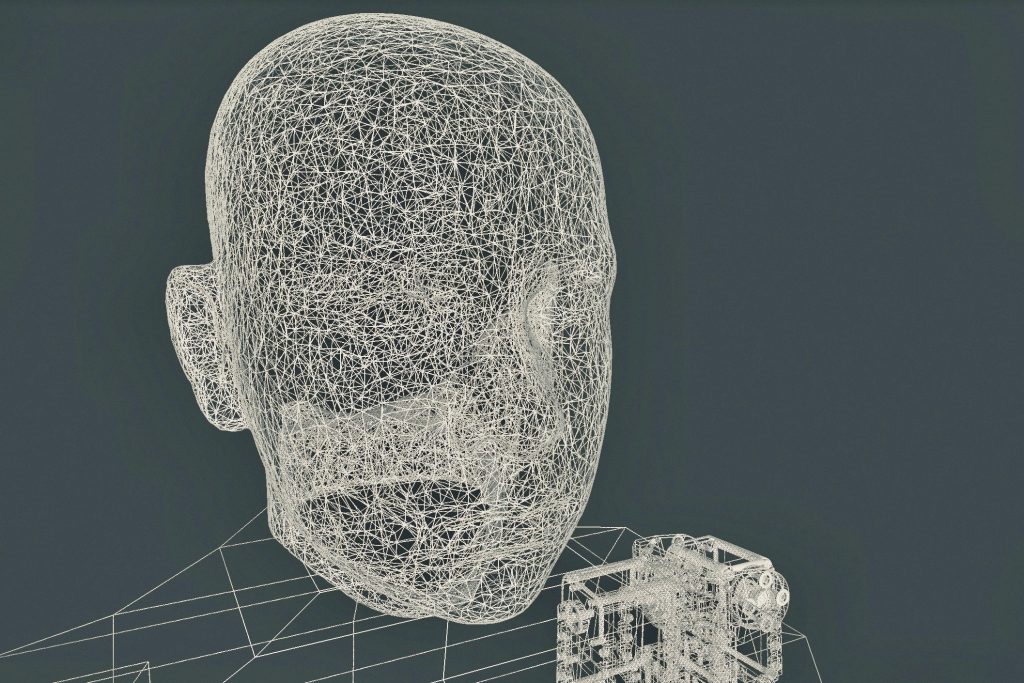

The robot moves around an exhibition space using a predefined map created with SLAM (simultaneous localization and mapping) technology and an Intel RealSense Tracking Camera sensor. It reacts and responds to the body language of audience members through a multi-layered face, skeleton, body and movement tracking algorithm connected to an Intel RealSense Depth Camera sensor. The artwork was programmed in C++ and FUGIO, the open-source visual programming system created by Alex May.

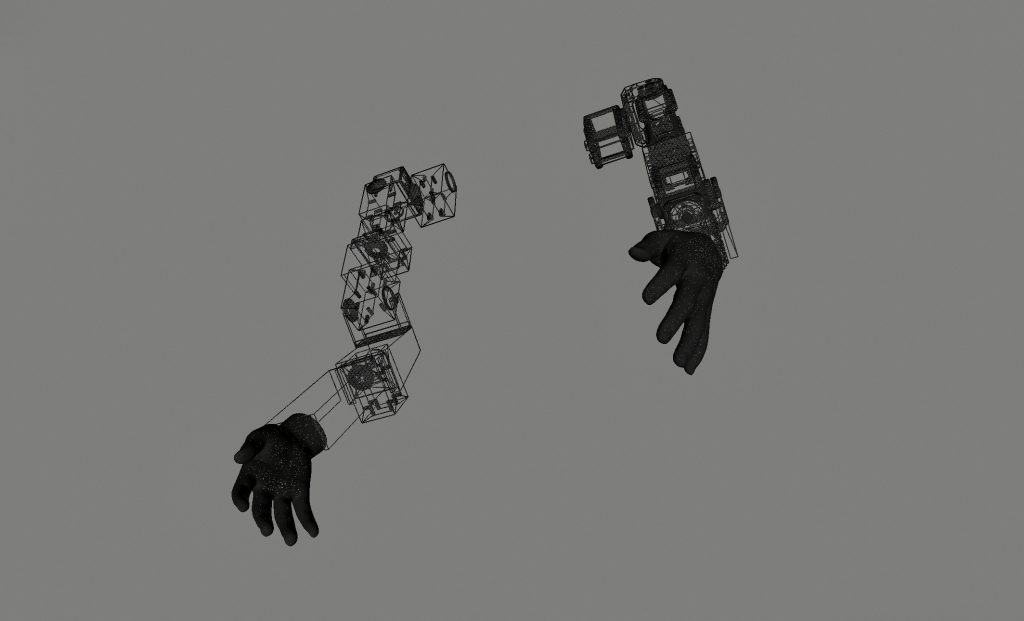

The small and fragile humanoid form is dressed in the clothes of a worker; its frail and insignificant body reminds us of the social groups that will be most affected by future automation. The robot’s head and hands are made from 3D-printed grey PLA, and it intentionally avoids categorizations of race and gender. There is extensive research into the relationship between robot appearance and social bias, and the great majority of robots are white. A robot’s role is also often tied to gender bias, with personal assistant and care robots being predominantly ‘female’. In “Cyberspecies Proximity” the artists have sought to problematize this issue within both the design and engineering communities and to kick-start debates on the unrecognized biases in common design practices.

The robot can be exhibited in a gallery or museum exhibition setting where it will roam around a predefined area using the Intel SLAM sensor to localize itself. It will look at the audience around it, using its RGB and depth cameras to search for poses, faces, bodies, movement and other interesting features using a combination of computer vision and machine learning algorithms working together as a hierarchical system for directing its attention and gaze. It will approach audience members and physically ‘communicate’ with them through movement, tilting its head, and responding to the poses of audience members with its own body language poses. The robot does not mimic or mirror the poses of audience members but rather recognizes the various ‘meanings’ of poses and then reacts with an appropriate pose of its own, from an extensive pre-defined library of poses and responses.
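The attention-and-response behaviour described above can be illustrated with a minimal Python sketch. It assumes a simple priority order of detection layers (face, then pose, then body, then movement) and a small lookup table from a recognized pose “meaning” to a response pose; the layer order, the confidence threshold, every pose name and all function names here are hypothetical and are not taken from the artists’ C++/FUGIO code. The point it shows is the structure: attention goes to the highest-priority layer with a confident detection, and the response is looked up by meaning rather than copied from the audience member’s pose.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical priority order of attention layers: earlier entries win.
ATTENTION_PRIORITY = ["face", "pose", "body", "movement"]

# Hypothetical library mapping a recognized pose "meaning" to a response pose.
# Responses are chosen by meaning; they do not mirror the input pose.
RESPONSE_LIBRARY = {
    "arms_crossed": "head_tilt_away",
    "waving": "raise_hand",
    "leaning_in": "head_tilt_curious",
}
DEFAULT_RESPONSE = "idle_look_around"


@dataclass
class Detection:
    kind: str                      # one of ATTENTION_PRIORITY
    confidence: float              # 0.0 to 1.0
    position: tuple                # (x, y) in image coordinates
    meaning: Optional[str] = None  # pose meaning, if one was classified


def choose_attention_target(detections, min_confidence=0.5):
    """Return the detection to look at, or None if nothing is confident.

    Walks the layers in priority order; within the first layer that has a
    detection above the threshold, picks the most confident one.
    """
    for kind in ATTENTION_PRIORITY:
        candidates = [d for d in detections
                      if d.kind == kind and d.confidence >= min_confidence]
        if candidates:
            return max(candidates, key=lambda d: d.confidence)
    return None


def choose_response(target):
    """Map the target's recognized meaning to a pose from the library."""
    if target is None or target.meaning is None:
        return DEFAULT_RESPONSE
    return RESPONSE_LIBRARY.get(target.meaning, DEFAULT_RESPONSE)


# Usage: the face detection is below the threshold, so attention falls
# through to the confident pose layer.
detections = [
    Detection("movement", 0.9, (10, 20)),
    Detection("pose", 0.8, (50, 60), meaning="waving"),
    Detection("face", 0.3, (55, 30)),
]
target = choose_attention_target(detections)
print(target.kind, choose_response(target))  # prints "pose raise_hand"
```

A strict priority walk keeps the gaze stable (a confident face always outranks background movement), while the fallback to lower layers means the robot still has something to look at when no one is facing it.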

The project forces us to consider issues of ownership of public spaces as well as the broader ethical implications of how we design robots and behave towards them.

Cyberspecies Proximity: Digital Twin

The artists have also been inspired by the methodologies of the construction industry and have developed a ‘digital twin’ of the robot, a virtual, screen- or wall-based version that can be exhibited in settings where a mobile robot is not practical. The digital twin is an accurate virtual model of the physical robot, created using the same 3D CAD assets used to build the robot, including precision models of all the motors in their various forms, the metal frame underpinning the form and the 3D designs of the head and hands. The digital twin also works with an Intel RealSense Camera sensor and uses exactly the same code base as the physical robot. The Cyberspecies Proximity: Digital Twin version would also be suitable for exhibition within an elevator, in the form of a performative (confined and time-limited) and interactive digital installation exploring human and robot interaction and co-mobility.

Dumitriu and May are pioneers in creating robotic artworks that performatively explore our relationships to new technologies, from HARR1, their constantly moving humanoid robot that exhibits body language and boredom, to Antisocial Swarm Robots, which make explicit the human need to project life-like behaviour onto robots and explore audiences’ inability to deconstruct even the simplest algorithms.

Schindler interviewed Anna Dumitriu and Alex May about their residency with the company in “Sharing a Space with a Robot” in May 2020.

The artists worked with Lucas Evers at Waag Society as producer to disseminate the final artwork through future exhibitions and events and to share their processes and methodologies.

Cyberspecies Proximity is a project by Anna Dumitriu and Alex May with the support of the VERTIGO project as part of the STARTS program of the European Commission, based on technological elements from Schindler, with the support of Stichting Waag Society.

Exhibitions and events

The Cyberspecies Proximity Robot and Cyberspecies Proximity: Digital Twin were shown as part of BioMedia: The Age of Media with Life-like Behaviour at ZKM in Karlsruhe (Germany) from 4th December 2021 to 28th August 2022.

Cyberspecies Proximity: Digital Twin was shown as part of BIOMEDIA: L’ÈRE DES MÉDIAS SEMBLABLES À LA VIE at Centre des arts Enghien-les-Bains in France from 13th May to 10th July 2022.

The Cyberspecies Proximity Robot premiered at CENTQUATRE in Paris (France) from 28th February to 1st March 2020 as part of the STARTS Residency Days.

Anna Dumitriu and Alex May discussed their research in collaboration with Schindler and presented their robotic works at an online S+T+ARTS talk for Waag on 2nd July at 7:30pm CEST. The presentation was followed by a Q&A moderated by Lucas Evers.

The artists gave a talk in the new Schindler Auditorium in conversation with Schindler Chairman Silvio Napoli and Nicholas Henchoz from the EPFL-ECAL Lab.